From not running an A/B test for long enough to testing too many things at once, below are some of the most common mistakes people make when A/B testing, and how you can avoid them.

A/B testing more than one thing at once

If you’re A/B testing ad copy, don’t start adjusting device bid modifiers as well. Don’t change the location targeting or the ad schedule at the same time either, or you won’t know which change caused any difference in results, and the data will be undermined.

A/B testing requires patience and control, so stick to one thing at a time. This is not the time for multitasking: combining multitasking with A/B testing will only make you more productive at ruining more than one thing at once!

Blindly A/B testing just for the sake of it

Before you run any A/B test, you need a hypothesis about why you want to run it. Ask yourself: what are you trying to discover?

For example, do you believe adding a call to action to your search ad copy will improve conversion rates? Will targeting mobile devices over desktops improve your new customer acquisition? If you think it will, why do you think that? Consider, for instance, whether your site gets a high share of mobile visits from organic sources but very few from paid sources. If it does, research whether something is contributing to that before you run any test.

Use the data you already have to support your hypothesis before running a potentially disastrous A/B test on a live ad account.

The whole point of testing is to improve your ads’ performance, so avoid running tests that are likely to do the opposite wherever you can!

Not testing at all!

This is a simple one, but it’s a cardinal sin I’ve seen over and over again in accounts I’ve managed. I’ve heard all the usual excuses: “there’s no need” or “our conversion rates are already high enough”.

The “if it isn’t broke, don’t fix it” approach works well for a lot of things in life, but digital marketing just isn’t one of them. Digital marketing, and paid advertising in particular, changes constantly. Don’t believe me? 5 years ago TikTok wasn’t a thing and the average consumer viewed content on 2 devices. Now, in 2020, people consume content on up to 5 devices, and TikTok is blowing up around the world as one of the most downloaded non-game apps ever, with companies raving about how they’ve used it to reach over a billion people a month!

The only logical reason not to be running some form of A/B testing is that you don’t yet have enough traffic to gain worthwhile insights.

If you’re not A/B testing your paid advertising because you don’t know where to start…

Here are a few key things you can start experimenting with:

- Ad copy. How does adding a current promotion to your ad copy affect click-through rate? Does adding your brand name to the ad copy improve performance or not?

- Call to action. Does the call to action give the user an incentive to click, or does it just say “Buy now”? What’s the effect of changing the call to action?

- Landing page. What’s the effect of users landing on a product page rather than a category page? If you have product variations, what’s the effect of sending people to the red jumper rather than the blue one?

- Bid strategy. Does Target ROAS perform better than Maximise Conversions?

- Keywords your ad is targeting. Does the keyword match type affect your click-through rate or conversion rate? Could you save spend by using broader match types with better use of negative keywords, rather than relying on exact match?

- Ad types. Do responsive search ads get better conversion rates than your expanded text ads? What is the effect of adding dynamic search ads?

- Product price (particularly for Google Shopping). How does marking your product’s price up or down by 2% affect your sales? Could you be making better margins?
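For the price test in particular, it helps to work out beforehand how much sales volume a price change can afford to lose, or must gain, before it costs you money. Here is a minimal Python sketch, assuming the cost per unit sold stays fixed; the £50 price and £35 unit cost are hypothetical figures for illustration only.

```python
def breakeven_volume_change(price: float, unit_cost: float, price_change: float) -> float:
    """Fractional change in units sold needed to keep gross profit flat.

    price_change is a fraction: 0.02 for a 2% markup, -0.02 for a 2% markdown.
    Assumes unit_cost (product plus any per-sale costs) does not change.
    """
    old_margin = price - unit_cost
    new_margin = price * (1 + price_change) - unit_cost
    if new_margin <= 0:
        raise ValueError("new price does not cover the unit cost")
    # Profit stays flat when old_margin * units == new_margin * new_units.
    return old_margin / new_margin - 1


# Hypothetical product: £50 price, £35 cost per unit sold.
print(f"2% markup:   {breakeven_volume_change(50, 35, 0.02):+.2%} units to break even")
print(f"2% markdown: {breakeven_volume_change(50, 35, -0.02):+.2%} units to break even")
```

With these made-up figures, a 2% markup still earns more gross profit as long as unit sales fall by less than 6.25%, while a 2% markdown needs roughly 7.1% more sales just to stand still.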

Not running A/B tests for enough time or leaving them running forever

Not running your A/B test for long enough

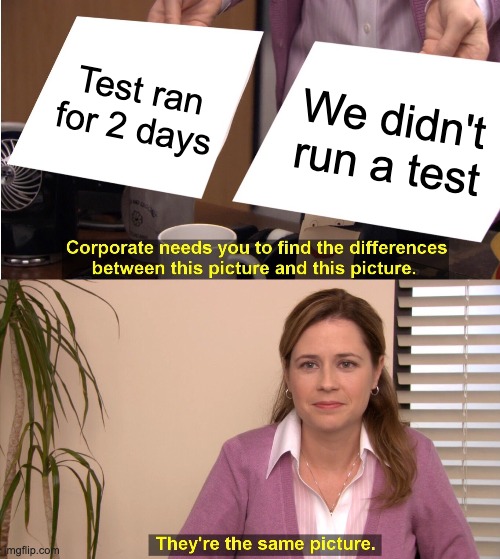

How much meaningful insight are you going to gain from 2 days of data if you only get 10,000 visitors a day to your website? I would argue not very much, so it baffles me when I see people make sweeping account changes or business decisions based on this amount of data. It usually ends one way, and guess which way that is? It costs them more money and sometimes even damages campaigns that were performing decently.

It’s the PPC equivalent of holding a double-edged sword and attacking your own reflection with it: you’re going to end up looking like the Black Knight from Monty Python.
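To put a rough number on “not very much”, here is a minimal sketch of a standard two-proportion z-test in Python. It is a common textbook check, not necessarily how the author evaluates tests. The conversion counts are hypothetical: 2 days at 10,000 visitors a day, split evenly, with variant B converting at 2.2% against variant A’s 2.0%.

```python
import math


def two_proportion_z_test(conv_a, visits_a, conv_b, visits_b):
    """Two-sided two-proportion z-test on conversion counts.

    Returns (z, p_value), using the pooled conversion rate for the standard error.
    """
    rate_a = conv_a / visits_a
    rate_b = conv_b / visits_b
    pooled = (conv_a + conv_b) / (visits_a + visits_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visits_a + 1 / visits_b))
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical 2-day test: 10,000 visitors per variant.
z, p = two_proportion_z_test(conv_a=200, visits_a=10_000, conv_b=220, visits_b=10_000)
print(f"z = {z:.2f}, p = {p:.2f}")
```

With these made-up numbers the p-value comes out at roughly 0.32, far above the conventional 0.05 threshold, so even a 10% relative lift in conversion rate can’t be told apart from random noise after two days.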

Running your A/B test for too long

The same can be said for leaving a test running forever. If you leave it running indefinitely, it isn’t an A/B test any more; it’s just two things you happen to be doing. You’re not running campaign A against campaign B to see which performs better, you’re just running two campaigns called A and B.

Okay… so how long?

How long you should run your A/B test depends on a number of factors, such as budget and audience size. As a rule of thumb, especially if it’s your first attempt at A/B testing, I would recommend running your split test for at least 2 weeks before making any significant changes. Over time, you’ll learn what works best for you and your business, always with the aim of making the decision that delivers the best ROI.
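If you want something more concrete than a rule of thumb, a common approach is to estimate the sample size each variant needs before you start, then divide by your traffic. The Python sketch below uses the standard normal-approximation formula for comparing two conversion rates. The 2% baseline conversion rate, 10% relative lift, 5% significance level and 80% power are illustrative assumptions, not figures from this article, and it assumes every daily visitor enters the test with an even split.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors each variant needs to detect the lift (two-sided test)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def days_needed(baseline_rate, relative_lift, daily_visitors, variants=2):
    """Days to reach the required sample, assuming all visitors are split evenly."""
    n = visitors_per_variant(baseline_rate, relative_lift)
    return math.ceil(n * variants / daily_visitors)


if __name__ == "__main__":
    print(f"Visitors needed per variant: {visitors_per_variant(0.02, 0.10):,}")
    print(f"Days at 10,000 visitors/day: {days_needed(0.02, 0.10, 10_000)}")
```

Under these assumptions each variant needs roughly 80,000 visitors, which at 10,000 visitors a day works out to about 17 days, in line with the two-week minimum suggested above. Smaller expected lifts or lower baseline conversion rates push the duration up quickly.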

Measuring the wrong metrics as proof of success

Who cares if you got more clicks or more impressions when you’re trying to get more conversions? If you’re running an A/B test to see how a change affects conversions, then conversions are the metric you should focus on when judging the results.

That doesn’t mean you should dismiss the insights you’ve gained on other metrics. You can apply those learnings to future tests; they just shouldn’t be your measure of success for this A/B test.
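A simple safeguard is to write the primary metric down before the test starts and pick the winner on that metric alone. This hypothetical Python sketch, with made-up numbers, shows how the click-through-rate winner and the conversions winner can be different variants. It assumes both variants received the same number of impressions and ignores statistical significance for brevity; `VariantStats` and `pick_winner` are illustrative names, not part of any ad platform’s API.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    name: str
    impressions: int
    clicks: int
    conversions: int

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions


def pick_winner(variants, primary_metric):
    """Return the variant with the highest value of the pre-agreed primary metric."""
    return max(variants, key=lambda v: getattr(v, primary_metric))


variants = [
    VariantStats("A", impressions=100_000, clicks=3_000, conversions=90),
    VariantStats("B", impressions=100_000, clicks=4_000, conversions=80),
]

PRIMARY_METRIC = "conversions"  # decided before the test started
winner = pick_winner(variants, PRIMARY_METRIC)
print(f"Winner on {PRIMARY_METRIC}: {winner.name}")

# Secondary metrics are still recorded as learnings for future tests.
for v in variants:
    print(f"{v.name}: CTR {v.ctr:.1%}, conversions {v.conversions}")
```

Here variant B wins on CTR (4.0% against 3.0%), but variant A delivers more conversions, so if conversions were the goal, A is the winner and B’s higher CTR is simply a learning to carry forward.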

Overlooking gremlins! (Gremlin: an often unintentional factor that still affects your results)

Okay, that’s not the dictionary definition of a gremlin, I’ll admit, but here’s an example of one I came across when A/B testing landing pages. We saw a huge increase in visits to one page over the other, but a much lower conversion rate. In my head that didn’t make any sense! Why would the conversion rate be so much lower on the more popular landing page?

So I walked through the user journey and realised that, to reach the checkout from this page, users had to go through an additional page that wasn’t part of the journey from the other one.

This wasn’t by design; it was a consequence of the way the page had been built, and it had been overlooked. The additional page’s drop-off report showed that this was where we were losing people: a whopping 84% of users dropped off on that page. So, of course, we removed it and restarted our A/B test. The more popular page then saw much better conversion rates.
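A drop-off report like this boils down to simple arithmetic: for each step, the share of users who reached it but never reached the next one. The Python sketch below uses hypothetical visitor counts chosen so that the additional page shows the same 84% drop-off; the step names and all other numbers are illustrative only.

```python
def step_drop_off(funnel):
    """Return (step, drop-off rate) for every step that has a following step.

    funnel is an ordered list of (step name, users who reached that step).
    """
    return [
        (step, 1 - next_users / users)
        for (step, users), (_, next_users) in zip(funnel, funnel[1:])
    ]


# Hypothetical journey for the more popular landing page.
funnel = [
    ("Landing page", 10_000),
    ("Additional page", 4_000),
    ("Checkout", 640),
    ("Purchase", 320),
]

for step, rate in step_drop_off(funnel):
    print(f"{step}: {rate:.0%} dropped off")
```

Running the same calculation for each variant’s journey side by side is a quick way to spot a step, like this one, that exists in only one of them.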

This highlights the need to check the full user journey for every variant before running an A/B test.

As harsh as it sounds, assume that everyone involved will make a mistake somewhere along the line, and have checks in place beforehand to avoid these sometimes costly setbacks.

So there’s my list of the most common mistakes I’ve seen. I hope you found it helpful. If you have any to add, why not let us know in the comments below? We’d love to hear about the worst gremlins you’ve ever seen too!