Before contacting website owners, you need to identify the links that are harming your search engine success. For a guide on how to do this, read Ben Wood’s post about how to get a reconsideration request accepted by Google. After that, follow this step-by-step guide to link removal requests.

Step 1: Writing your link removal request

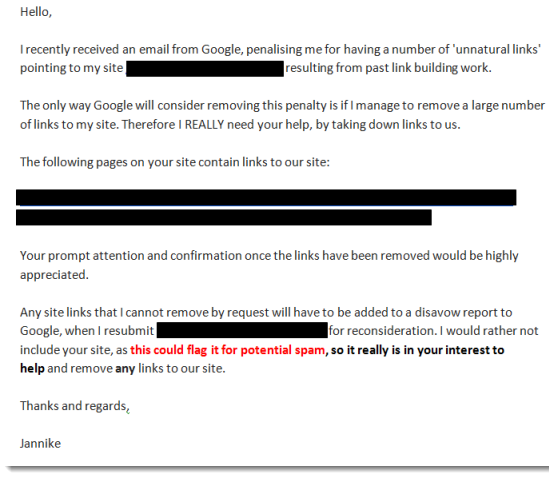

The key to getting website owners to remove links to you is setting the right tone. Here is an example of a recent link removal request:

Key features of this email are:

- It includes the exact URLs. This makes it easier for the site owner to locate and remove the links.

- It gives the website owner a motive for removing the link: not being seen as spammy by Google.

- It is polite. You are more likely to get a positive response by offering a helpful warning than by making a threat.

To see an example of a bad link removal request, see Susan Hallam’s post, Link Removal Requests: how not to do it.

Step 2: Contacting website owners

If you have links from hundreds, or even thousands, of websites, you will be sending a large number of emails. This is where a good Customer Relationship Management (CRM) tool comes in handy. We use BuzzStream, which finds available contact information for you and automatically inserts the links associated with each recipient into the email.
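If you would rather prepare the emails yourself before loading them into a CRM, the core of what such a tool does can be sketched in a few lines. This is a minimal sketch, not how BuzzStream works: it assumes you already have a plain list of the linking page URLs, groups them by website so each owner gets a single email, and fills in a hypothetical template that lists the exact URLs. The template wording and the function names are illustrative only.

```python
from collections import defaultdict
from urllib.parse import urlparse

# Hypothetical template; adapt the wording to the tone described in Step 1.
TEMPLATE = """Hi,

We are cleaning up links to our site that Google may see as unnatural.
Could you please remove the links to us on the following page(s)?

{urls}

Thank you for your help."""


def group_links_by_domain(link_urls):
    """Group linking pages by the website (hostname, minus any 'www.') they sit on."""
    groups = defaultdict(list)
    for url in link_urls:
        host = urlparse(url).hostname or ""
        if host.startswith("www."):
            host = host[len("www."):]
        groups[host].append(url)
    return dict(groups)


def build_requests(link_urls):
    """Return one email body per website, listing every exact URL to remove."""
    return {
        domain: TEMPLATE.format(urls="\n".join(f"- {u}" for u in urls))
        for domain, urls in group_links_by_domain(link_urls).items()
    }


links = [
    "http://www.example.com/links-page",
    "http://example.com/directory/widgets",
    "http://blog.example.org/post-1",
]
requests = build_requests(links)
print(sorted(requests))  # one email per website
```

Grouping by website means an owner with several links to you receives one email listing all of them, rather than several separate requests.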

Step 3: Resending link removal requests

Plan the timing of your reconsideration request so that you have enough time to contact each website owner at least twice before you submit it. This not only makes it more likely that your links will be removed, but also strengthens the evidence you send to Google.

Wait about a week before you make contact again, to give busy site owners enough time to respond if they intend to. Be careful to resend emails only to those who haven’t replied, or you risk annoying people who might otherwise help you.
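If you track your outreach in a spreadsheet or export, picking out who should get a second email is a simple filter. The sketch below is a minimal illustration under assumed field names (a hypothetical `Contact` record with the date of the last email and whether the owner replied); it selects only owners who have not replied and were last contacted at least seven days ago, matching the one-week wait suggested above.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Contact:
    # Hypothetical record of one website owner you have emailed.
    domain: str
    last_contacted: date
    replied: bool = False


def due_for_follow_up(contacts, today, wait=timedelta(days=7)):
    """Return contacts who have not replied and were last emailed at least `wait` ago."""
    return [
        c for c in contacts
        if not c.replied and today - c.last_contacted >= wait
    ]


contacts = [
    Contact("a.example", date(2024, 1, 1)),                # no reply, 9 days ago
    Contact("b.example", date(2024, 1, 1), replied=True),  # already replied
    Contact("c.example", date(2024, 1, 5)),                # no reply, only 5 days ago
]
for c in due_for_follow_up(contacts, today=date(2024, 1, 10)):
    print("Resend to", c.domain)
```

Filtering on the reply flag first is what keeps you from emailing owners who have already responded.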

Step 4: Taking the time to reply

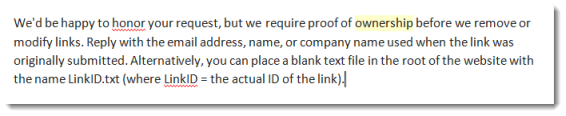

Link removal requests are not always a matter of sending emails and then forgetting about them. In my experience, some website owners want more information before removing links to your site, and some ask for proof that you own the website. For example, this was one of the replies we received during a link removal campaign for one client:

In these cases, providing the proof requested will usually get your link removed. Other site owners may want to know why a link from their site is seen as unnatural, and whether Google has named their site as low quality. Most of the time, a quick, polite answer is enough to get your links taken down.

Where to go from here

When you have sent all your emails and waited at least one week for replies, it is time to collate your evidence and present it to Google. This is also explained in Ben’s blog post, mentioned at the beginning of this article.